Lately "Virtual Reality", or "VR" for short, is being hyped as the next big thing, so I decided to write about my past and present experiences with it.

Mostly I am going to show off my cool toys here :D, but I'll also share some hopefully interesting insights and experiences I had with them.

Color filters

Around 2005, when I was about 15, I started dreaming about building a realistic gaming simulator consisting of a treadmill and two displays in front of my eyes covering my complete field of view, and I never really gave up on that.

My first step in that direction was 3D glasses using color filtering, because I couldn't afford shutter glasses, which at that time only worked with CRT monitors and weren't being produced anymore.

Color filtering glasses, ideally red/cyan ones, allowed for some color and were even supported by some early NVIDIA stereo drivers, which kind of worked with some games. The first game I spent a lot of time with in 3D was TES: Oblivion, and it was awesome. Sure, shadows and reflections didn't really work and the colors were a bit off, but that was a tradeoff I was willing to make.

Around the same time I also started getting into game development and computer graphics using Gamestudio, and I spent a lot of time integrating stereo rendering for those red/cyan glasses into everything I created.

In my experience these glasses work best with greyscale images, but they also allow for limited color if you accept some ghosting.

My usual approach was to simply take the red channel of one eye's image and the green and blue channels of the other eye's image. This fails if there is no red in the image, or if it is all red, because one eye then gets little or no image at all.
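A minimal sketch of that channel-swapping approach, written in Python for readability (it is not the original Gamestudio implementation). It assumes images are lists of rows of 8-bit `(r, g, b)` tuples of equal size, and that the red filter covers the left eye, which is the usual red/cyan convention; the name `anaglyph` is just illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (r, g, b)
Image = List[List[Pixel]]


def anaglyph(left: Image, right: Image) -> Image:
    """Combine a stereo pair into a red/cyan anaglyph.

    Assumes the red filter covers the left eye, so the left image
    supplies the red channel and the right image supplies green and blue.
    """
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same size")
    return [
        [(l[0], r[1], r[2]) for l, r in zip(row_l, row_r)]
        for row_l, row_r in zip(left, right)
    ]


# A pure blue scene shows the failure case: the left (red) eye gets black.
left = [[(0, 0, 255)]]
right = [[(0, 0, 255)]]
print(anaglyph(left, right))  # [[(0, 0, 255)]]
```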

The red side also feels darker than the cyan side, which causes an imbalance between the eyes that can feel a bit uncomfortable.

This could probably be improved by making things a bit brighter and maybe blending in some of the greyscale version of the image when it is lacking a specific color channel. I didn't think of that when I made it, though, and was quite happy with what I had.
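That improvement was never implemented, so the following is purely a hypothetical sketch of it: each eye's channels are mixed with that eye's luminance (using Rec. 601 weights) so neither eye goes fully dark, and the red side gets a small brightness gain. `grey_mix` and `red_gain` are made-up tuning parameters, not values from any real project.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (r, g, b)
Image = List[List[Pixel]]


def luminance(p: Pixel) -> float:
    # Rec. 601 luma weights, a common choice for greyscale conversion.
    return 0.299 * p[0] + 0.587 * p[1] + 0.114 * p[2]


def clamp8(x: float) -> int:
    return max(0, min(255, round(x)))


def blended_anaglyph(left: Image, right: Image,
                     grey_mix: float = 0.5, red_gain: float = 1.2) -> Image:
    """Red/cyan anaglyph that mixes in greyscale so no eye goes fully dark.

    grey_mix: 0 = plain channel swap, 1 = pure greyscale (hypothetical knob).
    red_gain: brightens the red side to offset its darker look (hypothetical).
    The left eye is assumed to wear the red filter.
    """
    out = []
    for row_l, row_r in zip(left, right):
        out_row = []
        for l, r in zip(row_l, row_r):
            lum_l, lum_r = luminance(l), luminance(r)
            red = red_gain * ((1 - grey_mix) * l[0] + grey_mix * lum_l)
            green = (1 - grey_mix) * r[1] + grey_mix * lum_r
            blue = (1 - grey_mix) * r[2] + grey_mix * lum_r
            out_row.append((clamp8(red), clamp8(green), clamp8(blue)))
        out.append(out_row)
    return out
```

With `grey_mix=0` and `red_gain=1` this degrades to the plain channel swap, which makes it easy to compare both versions side by side while tuning.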

Something I did experiment with was the angle of the cameras and the distance between them. Too much distance or too much of an angle makes looking at the images a lot more exhausting, or at some point breaks the effect entirely. The distance between the cameras should ideally match the distance between your real eyes at the scale of the game world; more distance makes the stereo effect stronger and less makes it weaker. The angle defines which distances come out of the screen and which go beyond it, because the screen plane sits where two rays cast from the cameras along their viewing directions would cross. I usually made the cameras look in exactly the same direction, which places the screen plane at an infinite distance. It tends to feel a bit strange, but seemed to work better for me.
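The relation between camera distance, angle and screen plane can be made concrete with a small calculation. This sketch assumes two cameras `separation` apart, each rotated inward ("toed in") by `toe_in` radians; their view rays cross at the zero-parallax plane, i.e. the screen. With zero toe-in the cameras are parallel and that plane moves to infinity, matching the setup described above. The function names and the 64 mm eye distance are illustrative assumptions.

```python
import math


def screen_plane_distance(separation: float, toe_in: float) -> float:
    """Distance at which the two camera view rays cross (zero parallax).

    separation: distance between the two cameras, in world units.
    toe_in: inward rotation of each camera, in radians (0 = parallel).
    Objects nearer than this distance appear in front of the screen,
    objects farther away appear behind it.
    """
    if toe_in <= 0.0:
        return math.inf  # parallel cameras: the rays never cross
    return (separation / 2.0) / math.tan(toe_in)


def toe_in_for_distance(separation: float, distance: float) -> float:
    """Inverse: the toe-in angle that puts the screen plane at `distance`."""
    return math.atan((separation / 2.0) / distance)


eye_distance = 0.064  # about 64 mm, an assumed typical adult eye distance
angle = toe_in_for_distance(eye_distance, 2.0)
print(math.degrees(angle))                         # roughly 0.92 degrees
print(screen_plane_distance(eye_distance, angle))  # about 2.0
print(screen_plane_distance(eye_distance, 0.0))    # inf
```

The tiny angle needed for a 2 m screen plane also shows why small changes to the angle have such a large effect on what comes out of the screen.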

Two things you should never do, in my opinion, are cutting off things that come out of the screen at its edges (lots of movies still do this even when they don't have to) and converting 2D images to stereo; while the latter somewhat works, it is always an inferior experience.

During this time I also got pretty good at viewing side-by-side stereo images with the parallel or cross-eyed method :P.

Then Avatar came out and people started talking about this 100-year-old technology as if it were something totally new and modern...

Head tracking

Something that inspired me a lot was this video:

As a result I bought a Wii Remote, but never used it for head tracking. What I did do, though, was create my own "bright light tracking" using a webcam and a flashlight:

I still think that the concept of controlling the character by looking in the direction you want it to go is quite promising.
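Bright light tracking and look-to-steer combine naturally, roughly like this: find the centroid of the brightest pixels in a greyscale webcam frame, then turn the horizontal offset of that spot into a steering rate. This is a hypothetical reconstruction, not the original code; frame capture is left out, and `threshold` and `max_turn_rate` are made-up tuning values.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # greyscale webcam frame, values 0..255


def bright_spot(frame: Frame, threshold: int = 240) -> Optional[Tuple[float, float]]:
    """Centroid (x, y) of all pixels at or above `threshold`, or None."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # light not visible: caller keeps its previous estimate
    return sum_x / count, sum_y / count


def steering(spot_x: float, width: int, max_turn_rate: float) -> float:
    """Map the spot's horizontal offset from the image center to a turn rate.

    Returns -max_turn_rate at the left edge, 0 at the center and
    +max_turn_rate at the right edge. Webcam images are often mirrored,
    in which case the sign has to be flipped.
    """
    if width < 2:
        raise ValueError("frame must be at least 2 pixels wide")
    center = (width - 1) / 2.0
    return (spot_x - center) / center * max_turn_rate
```

Using the centroid of all bright pixels instead of the single brightest one makes the estimate a bit less jumpy when the light flickers or covers several pixels.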

my3D

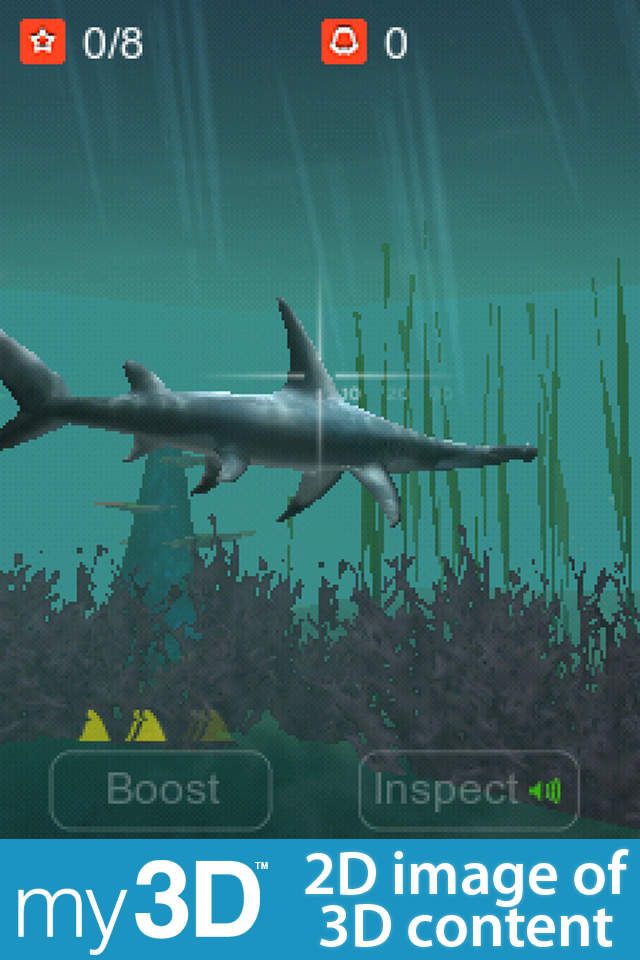

Sometime in 2009(?) I was asked to help out with some shaders for an iPhone game made with Unity (yes, it was even worse back then than it is now) for Hasbro's my3D. my3D was a cheap ($25) viewer for 3D content on the iPhone and iPod Touch; it was not very successful and was taken off the market only a few months after its US release. They probably regret that decision by now...

Especially with the iPhone 4 and 4S and their Retina screens this could have been great, if only the content had been good. The "game" I was involved in, "my3D 360 Sharks", was not that bad, but could have been a lot better in my opinion. Performance was bad at low resolutions, and the graphics should have been a bit more colorful, with maybe fewer fancy effects in some places and more in others.

You used your thumbs on the screen for some input and could turn around using the gyroscope.

Later I made my own experiment with my3D: a kind of third-person spaceship racing game where you could again steer by looking where you wanted to go. I even made it work with my iPhone 3GS's accelerometer and magnetometer, because the 3GS didn't have a gyroscope. Sure, the quality wasn't perfect, but it was much better than I expected after the internet and my colleagues kept telling me it wasn't really possible...
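Without a gyroscope, orientation has to come from gravity (accelerometer) and the Earth's magnetic field (magnetometer). A common way to combine them is a tilt-compensated compass: roll and pitch from the accelerometer, then heading from the magnetometer after rotating its reading back into the horizontal plane. This is a sketch of that general technique, not the code from the original experiment; the axis conventions in the docstring are assumptions (iOS reports its axes differently), and `alpha` is an arbitrary smoothing factor.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def low_pass(previous: Vec3, sample: Vec3, alpha: float = 0.1) -> Vec3:
    """Exponential smoothing to tame accelerometer/magnetometer noise."""
    return tuple(p + alpha * (s - p) for p, s in zip(previous, sample))


def orientation(accel: Vec3, mag: Vec3) -> Tuple[float, float, float]:
    """Roll, pitch and yaw (radians) from gravity and the magnetic field.

    Assumes a device frame with x forward, y right, z down, an
    accelerometer that reads (0, 0, +1 g) when lying flat, and a
    magnetometer with hard-iron offsets already removed.
    """
    ax, ay, az = accel
    mx, my, mz = mag
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, ay * math.sin(roll) + az * math.cos(roll))
    # Rotate the magnetic field back into the horizontal plane before
    # taking the heading ("tilt compensation").
    bx = (mx * math.cos(pitch)
          + my * math.sin(pitch) * math.sin(roll)
          + mz * math.sin(pitch) * math.cos(roll))
    by = mz * math.sin(roll) - my * math.cos(roll)
    yaw = math.atan2(by, bx)
    return roll, pitch, yaw
```

Smoothing the raw vectors with `low_pass` before calling `orientation` trades some latency for stability, which is roughly the tradeoff you have to accept when no gyroscope is available.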

Oculus DK1

Then at some point there was this thing called Kickstarter and this company called Oculus, which I totally missed.

But when the Oculus DK1 finally started shipping to backers, I realized that it was the thing I had always wanted and preordered one. (I have a couple of really low-resolution displays lying around because I planned on building something similar several times, but never got around to actually doing it, which is the only reason I haven't sold a VR company for a couple of billion yet :P).

Sometime in early August 2013 I got my Oculus DK1, and I loved it!

For about 20 minutes, after which I had to take it off and wait at least 2 hours for my motion sickness to get back to an acceptable level...

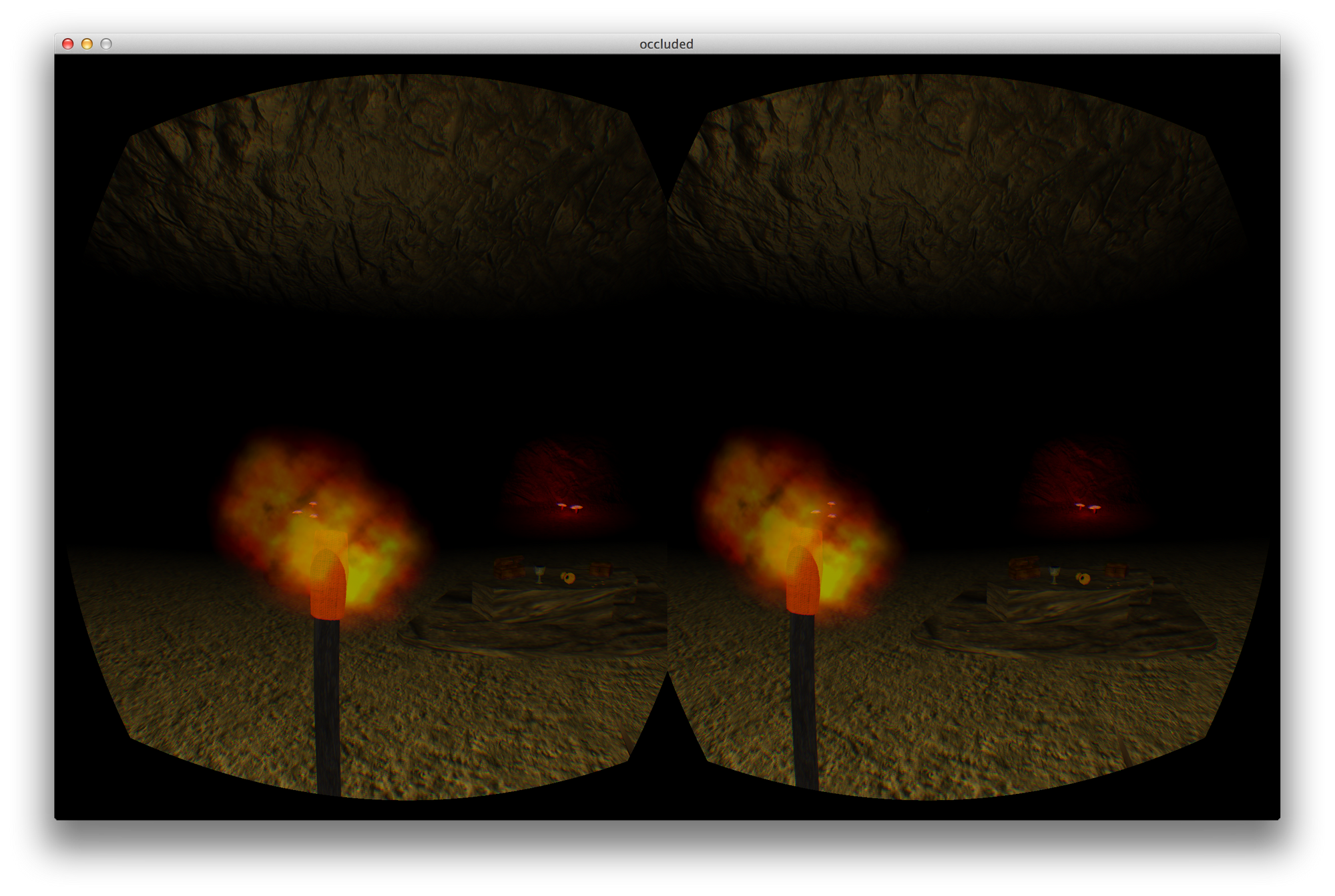

At the same time Oculus hosted a 4-week(?) game jam, and @widerwille.com and I decided to create a game for it using our game engine Rayne, which at that point was just starting to become usable. As a result we spent most of the time on the engine and not much on the game, but we did end up with something that wasn't great, but worked.

Since we integrated the Oculus support ourselves, you could just start the game and it would automatically detect the Oculus and go fullscreen on the correct screen. Back then you had to integrate the distortion shaders yourself, so we did, including gamma correction at the correct point in the pipeline, which the latest Oculus SDK has only just added too ;)
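For context, the distortion step boils down to a radial polynomial: each output pixel samples the rendered image at a position pushed outward from the lens center by a factor that grows with the radius. The sketch below shows that math on the CPU in Python rather than as a shader. The coefficients are illustrative (they match what is commonly quoted as the DK1 defaults, but treat them as an assumption), and positions are assumed to be already normalized relative to the lens center.

```python
from typing import Tuple

# Radial distortion coefficients K0..K3 (assumed, for illustration only;
# real code should take them from the SDK / HMD info).
K = (1.0, 0.22, 0.24, 0.0)


def distortion_scale(r_sq: float, k: Tuple[float, float, float, float] = K) -> float:
    """Polynomial radial scale K0 + K1*r^2 + K2*r^4 + K3*r^6 (Horner form)."""
    return k[0] + r_sq * (k[1] + r_sq * (k[2] + r_sq * k[3]))


def warp(x: float, y: float) -> Tuple[float, float]:
    """Map an output position (relative to the lens center) to the position
    in the rendered image that should be sampled there. Sampling farther out
    at larger radii produces barrel distortion, which cancels the lens's
    pincushion distortion."""
    s = distortion_scale(x * x + y * y)
    return x * s, y * s


def linear_to_srgb(c: float) -> float:
    """Encode a linear value in [0, 1] with the sRGB transfer function."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055
```

The gamma part is about ordering: the distortion pass filters (blends) neighboring texels, and that blending is only correct on linear values. So the scene stays linear while it is resampled, and `linear_to_srgb` (or an sRGB framebuffer) is applied at the very end. Encoding first and filtering afterwards blends gamma-encoded values, which makes blended pixels come out too dark.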

![VR Jam screenshot 1](http://rayne3d.com/showcase/vrjam/screen01.png)

I spent lots of time just jumping off the one high cliff in our game's cave...

Tinting the image red when taking damage also worked well.
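A hypothetical version of such a damage effect, written per pixel for clarity (in practice it would be a fullscreen post-process or a tinted overlay): blend toward red right after a hit and fade out linearly. `duration` and `strength` are made-up values, not the ones used in the jam game.

```python
from typing import Tuple

Color = Tuple[float, float, float]  # linear RGB in [0, 1]

DAMAGE_RED: Color = (1.0, 0.0, 0.0)


def damage_tint(color: Color, time_since_hit: float,
                duration: float = 0.5, strength: float = 0.6) -> Color:
    """Blend a pixel toward red right after taking damage, fading out
    linearly over `duration` seconds."""
    if time_since_hit < 0.0 or time_since_hit >= duration:
        return color  # no recent hit: leave the pixel untouched
    amount = strength * (1.0 - time_since_hit / duration)
    return tuple(c + amount * (r - c) for c, r in zip(color, DAMAGE_RED))
```

Because the tint is applied as a post-process, both eye images get exactly the same treatment, so it doesn't disturb the stereo effect.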

![VR Jam screenshot 3](http://rayne3d.com/showcase/vrjam/screen03.png)

One of the most important things I learned at this time was which first-person controls work best for me: turn the player with the mouse or controller, have it walk in the direction its body is facing, and then add the head tracking on top of that without affecting the movement. This is contrary to what Oculus recommends, because they prefer to always move the player relative to where you are facing. But that way you can't just run in one direction and look around while running.
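Reduced to yaw only, the difference between the two schemes is which angle drives movement. In this sketch (hypothetical names, 2D ground plane, pitch and roll left out), the mouse or controller turns the body, movement follows the body's yaw, and the head yaw is only added when building the camera. The variant Oculus recommends would instead feed the combined view yaw into `forward()` for movement.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # (x, z) on the ground plane


def forward(yaw: float) -> Vec2:
    """Unit forward vector for a yaw angle (0 = +z, positive turns toward +x)."""
    return math.sin(yaw), math.cos(yaw)


def update_player(position: Vec2, body_yaw: float, mouse_dx: float,
                  move_input: float, dt: float,
                  sensitivity: float = 0.005, speed: float = 3.0) -> Tuple[Vec2, float]:
    """Mouse/controller turns the body; movement follows the body only."""
    body_yaw += mouse_dx * sensitivity
    fx, fz = forward(body_yaw)
    step = move_input * speed * dt
    return (position[0] + fx * step, position[1] + fz * step), body_yaw


def view_yaw(body_yaw: float, head_yaw: float) -> float:
    """Camera yaw: head tracking is layered on top of the body's yaw and
    never feeds back into movement."""
    return body_yaw + head_yaw
```

Since `update_player` never sees the head yaw, you can keep running straight ahead while looking to the side.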

Something else that helped me with motion sickness was actually standing up and doing some running movements in place.

Oculus DK2

A year later, in August 2014, I finally got my Oculus DK2. It came with positional tracking and a better screen, and turned out to be a big step in the right direction towards less motion sickness. Sure, you can still see pixels, which is a problem with small text, but if you can lean in to read it, it isn't really a big issue, and it is totally possible to lose yourself in a game with it.

Motion sickness is still a problem in standard first-person experiences, but others, like Elite: Dangerous, with lots of open space and a fixed frame around you, work perfectly; I can play it for many hours without any problems.

Input devices

Speaking of E:D, I have a nice joystick and pedals for playing X-Plane, and they are perfect for VR: all buttons are easily reachable, and since they look about the same as the ones in the spaceship's cockpit, you sometimes forget that the in-game hands aren't your real ones.

This is the experience that convinced me that VR works and has a future, if the games are a good fit.

At some point I got a Razer Hydra motion controller, which uses a magnetic field for position and rotation tracking. Since it tracks both of your hands and has a number of buttons, it makes a quite powerful input device for room-scale VR.

Unfortunately it is tethered, but so is the DK2.

I created a little tennis-like game with it over a weekend for Ludum Dare. The idea is to transform the DK2's and the Hydra's positions into the same coordinate system, so that moving around in the room has the same effect in the game, and it just works. The Hydra isn't perfectly accurate and there can be some distortion in the tracking, but it is still a lot of fun. I recorded a video showing it in action:
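How the two tracking spaces get aligned isn't covered above; one hypothetical way to do it is a two-point calibration: measure the same two physical points with both trackers (for example by holding a controller against the headset at two spots in the room), derive the rotation about the vertical axis from the direction between them, and the translation from one of them. This sketch assumes both devices agree on the up direction and use the same units (convert first if one reports millimeters and the other meters), and it ignores the Hydra's magnetic distortion.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z), y up, same units in both spaces


def rotate_y(p: Vec3, yaw: float) -> Vec3:
    """Rotate p about the vertical axis, i.e. within the (x, z) plane."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (p[0] * c - p[2] * s, p[1], p[0] * s + p[2] * c)


def calibrate(hydra_a: Vec3, hydra_b: Vec3,
              hmd_a: Vec3, hmd_b: Vec3) -> Tuple[float, Vec3]:
    """Estimate yaw + offset mapping Hydra space into HMD tracking space.

    hydra_a/hmd_a and hydra_b/hmd_b are the same two physical points as
    reported by each tracker. The points must differ horizontally.
    """
    angle_hydra = math.atan2(hydra_b[2] - hydra_a[2], hydra_b[0] - hydra_a[0])
    angle_hmd = math.atan2(hmd_b[2] - hmd_a[2], hmd_b[0] - hmd_a[0])
    yaw = angle_hmd - angle_hydra
    rx, ry, rz = rotate_y(hydra_a, yaw)
    return yaw, (hmd_a[0] - rx, hmd_a[1] - ry, hmd_a[2] - rz)


def hydra_to_hmd(p: Vec3, yaw: float, offset: Vec3) -> Vec3:
    """Transform a Hydra position into the HMD tracking space."""
    x, y, z = rotate_y(p, yaw)
    return (x + offset[0], y + offset[1], z + offset[2])
```

Once both hands and the head live in one space, physically walking toward the ball moves the player toward it in the game without any extra input mapping.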

Lately I have been experimenting with the Leap Motion and a Kinect. Both naturally have issues with hands or other body parts being occluded by objects or other body parts, since they only have a single point of view. A Kinect would probably work as a quite solid replacement for the Hydra in the example above, but the Leap Motion's field of view, when mounted on a DK2, might be too small to always track the hands.

I started writing a quick-and-dirty DX12 renderer with Oculus and Leap Motion support, but haven't gotten past the basic rendering part yet.

I am considering a simple boxing prototype (punching a punching bag) and/or a sandbox where you can shape things with your hands.

Another project I still want to spend some time on is a small scene with a realistic animated person and some minor interaction like talking, walking around and throwing a ball or whatever. While it won't feel real, it should make for a very nice experience.

Future

Now I am really looking forward to the HTC Vive and the Oculus CV1 and their input devices. This had better be awesome :).

Another thing I am really interested in is Microsoft's HoloLens; unfortunately they only sell the devkits in the US and Canada for now...